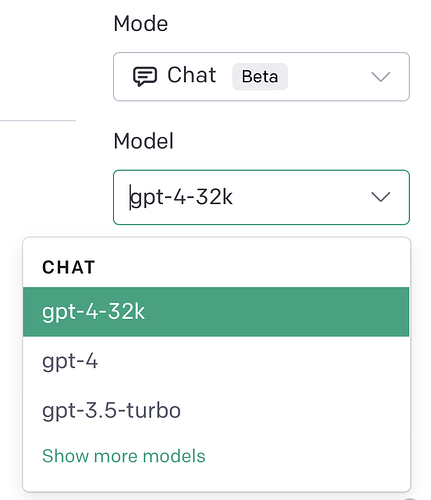

ChatGPT GPT-4 32k Upgraded Memory Rolling Out

Chatter about ChatGPT GPT-4 32k has been buzzing for over a month now. As people eagerly await its broad release, some claim that GPT-4 32k is a bigger leap than the move from GPT-3.5 to GPT-4. This article covers how the higher token limit can unlock a wide range of new functionality.

Early Access to GPT-4 32k

Some insiders and influencers have already gained access to the highly anticipated GPT-4 32k rollout. There are several community posts about it, and even more on Twitter.

The Importance of Token Limits

A token limit is the maximum number of tokens an AI model can handle in a single request, counting both the input it reads and the output it generates. Tokens are units of text representing words, subwords, or characters; they serve as the building blocks for natural language processing models.
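
As a rough illustration of how text maps to tokens, here is a minimal sketch that estimates a token count from a word count. It assumes the common rule of thumb of about 0.75 English words per token; the model's real tokenizer will give different numbers, and `estimate_tokens` and `fits_in_limit` are hypothetical helper names used only for this example.

```python
import math

# Assumption: about 0.75 English words per token (a rule of thumb,
# not the model's actual tokenizer).
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate based on whitespace-separated words."""
    word_count = len(text.split())
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_in_limit(text: str, token_limit: int) -> bool:
    """Check whether the estimated token count stays within the limit."""
    return estimate_tokens(text) <= token_limit
```

For an exact count you would need the tokenizer that matches the model; this estimate is only good enough for quick back-of-the-envelope checks.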

Token limits exist because of two main constraints: computational cost and memory limitations. Raising the limit brings one main benefit, which is improved response quality on long inputs. With more tokens available for reading and generating text, AI models like ChatGPT GPT-4 32k can perform more challenging tasks while maintaining coherent responses.

What GPT-4 32k Offers

The move from a limit of roughly 8,000 tokens in GPT-4 to 32,000 tokens with GPT-4 32k promises numerous improvements over its predecessors. Simply put, GPT-4 32k can support roughly 25,000 words, or about 48 pages of text.

Some key potential advancements include:

1. Longer Text Analysis

With the higher token limit, AI models can analyze entire books, research papers, contracts, and other lengthy documents without breaking them into smaller chunks.

2. Improved Context Retention

The larger token limit allows the model to retain context better over longer input sequences. As a result, AI systems like ChatGPT can keep more information about a topic in view at once, which helps accuracy.

3. Enhanced Summarization and Translation

Because models like GPT-4 32k can take in more of a long text at once, they can produce better summaries. The same applies to translation, where more surrounding context leads to more accurate and coherent results.

4. Better Conversational AI Performance

Chatbots and other conversational AI systems will benefit from the larger token limit, allowing for more extensive dialogue while maintaining context throughout the conversation.

5. Advanced Creative Writing and Content Generation

Increasing the available tokens means AI can generate longer pieces of text for creative writing tasks such as articles, stories, or full-length novels.
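
To make the "no more chunking" point concrete, here is a minimal sketch that splits a document into pieces small enough for a given token limit. It assumes about 0.75 words per token and reserves some tokens for the model's reply; `split_for_model` and the `reserved_tokens` default are hypothetical choices for illustration, not part of any official API.

```python
# Assumption: about 0.75 English words per token (rule of thumb only).
WORDS_PER_TOKEN = 0.75


def split_for_model(text: str, token_limit: int, reserved_tokens: int = 1_000) -> list[str]:
    """Split text into word-based chunks that should fit the token limit.

    reserved_tokens is held back for instructions and the model's reply.
    """
    usable_tokens = token_limit - reserved_tokens
    words_per_chunk = int(usable_tokens * WORDS_PER_TOKEN)
    if words_per_chunk < 1:
        raise ValueError("token_limit is too small for the reserved tokens")

    words = text.split()
    return [
        " ".join(words[i:i + words_per_chunk])
        for i in range(0, len(words), words_per_chunk)
    ]


if __name__ == "__main__":
    document = "word " * 20_000  # stand-in for a 20,000-word report
    print(len(split_for_model(document, 8_192)))   # chunks needed at 8k
    print(len(split_for_model(document, 32_768)))  # chunks needed at 32k
```

Under these assumptions, a 20,000-word document needs four chunks at the 8k limit but fits in a single request at 32k, so the model sees the whole text at once.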

Is GPT-4 32k better than 8k?

GPT-4 has two variants with different token limits: GPT-4-8K and GPT-4-32K. GPT-4-8K can handle 8,192 tokens, while GPT-4-32K can process 32,768 tokens. In terms of words, GPT-4-8K can handle roughly 6,000 words and GPT-4-32K up to about 25,000 words.

Here’s a comparison table:

| Model Variant | Token Limit | Approximate Word Limit |
|---|---|---|
| GPT-4-8K | 8,192 | ~6,000 |
| GPT-4-32K | 32,768 | ~25,000 |
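
The word figures in the table can be reproduced with simple arithmetic. The sketch below assumes the rule of thumb of about 0.75 words per token, which is an approximation rather than an exact property of the model; `approx_words` is a hypothetical helper.

```python
# Assumption: about 0.75 English words per token (rule of thumb only).
WORDS_PER_TOKEN = 0.75

TOKEN_LIMITS = {"GPT-4-8K": 8_192, "GPT-4-32K": 32_768}


def approx_words(tokens: int) -> int:
    """Convert a token count into an approximate word count."""
    return round(tokens * WORDS_PER_TOKEN)


for name, limit in TOKEN_LIMITS.items():
    print(f"{name}: {limit:,} tokens ~ {approx_words(limit):,} words")
# GPT-4-8K: 8,192 tokens ~ 6,144 words
# GPT-4-32K: 32,768 tokens ~ 24,576 words
```

Rounded, these match the ~6,000 and ~25,000 word figures above.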

It is clear that many people are excited about the potential benefits of the new 32K rollout. While some users have reported issues accessing the new capacity, others have expressed enthusiasm about the improvements it will bring to content creation.

One specific example comes from a commenter suggesting using a Linkbot service to automatically build internal links for new posts. This kind of automation could save content creators much time and effort, making it easier to focus on the actual writing itself.

Another commenter recommends MPT-7B, an open model with a reported token limit of 65K that can be run locally or on Hugging Face. While it is not quite as good as GPT-4, tests show that other tools out there can offer similar benefits.

The increased memory capacity of GPT-4 32k is seen as a significant improvement over its 8K predecessor. While there may be some issues with access and implementation, the potential benefits of this upgrade are clear. Content creators can expect to see improvements in efficiency and productivity, which could ultimately lead to better content for their audiences.

What This Means for Developers

AI developers are thrilled about the possibilities GPT-4 32k brings to different use cases. Examples include applications like turbocharged programming, where entire projects’ worth of code can be processed more efficiently, and meeting summarization without breaking down conversations into smaller chunks.

For coding applications, GPT-4 32k could make it possible to write entire apps in a single API call and debug or add features rapidly. The increase in tokens is also anticipated to significantly impact personalized content generation – users could process dozens of full articles and receive customized news summaries.
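
As a sketch of the news-summary idea, the snippet below greedily packs whole articles into one request until an estimated token budget is used up. The 0.75 words-per-token ratio, the `reserved_tokens` default, and the helper names are all assumptions made for illustration; a real application would count tokens with the model's own tokenizer.

```python
import math

# Assumption: about 0.75 English words per token (rule of thumb only).
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate based on whitespace-separated words."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def pack_articles(articles: list[str], token_limit: int, reserved_tokens: int = 2_000) -> list[str]:
    """Select articles in order until the next one would exceed the budget.

    reserved_tokens is held back for instructions and the generated summary.
    """
    budget = token_limit - reserved_tokens
    selected: list[str] = []
    used = 0
    for article in articles:
        cost = estimate_tokens(article)
        if used + cost > budget:
            break  # stop at the first article that does not fit
        selected.append(article)
        used += cost
    return selected


if __name__ == "__main__":
    articles = ["word " * 1_500] * 20  # twenty stand-in 1,500-word articles
    print(len(pack_articles(articles, 8_192)))   # articles per request at 8k
    print(len(pack_articles(articles, 32_768)))  # articles per request at 32k
```

With these stand-in articles of about 2,000 estimated tokens each, three fit in an 8k request and fifteen in a 32k request, which is what makes "dozens of articles" feasible in only a couple of calls.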

Although moving to GPT-4 32k might result in higher costs per token, the increased functionality and potential for new applications far outweigh these concerns. As we await widespread release, it becomes clear that GPT-4 32k is just the beginning of what’s possible in AI language models.

Looking Ahead: Unbounded Possibilities

The future promises even more significant advancements beyond GPT-4 32k. Research is investigating techniques like the Recurrent Memory Transformer (RMT), which reportedly allows memory retention across up to 2 million tokens. Though still in the research stage, this work could unlock far more capable language models and point toward effectively unbounded context. The AI landscape remains on a path of continuous growth and improvement; GPT-4 32k is just one stepping stone.